Virginia Commonwealth University – virtual workshops. Make Your Own Zoom Background, Streaming Media, Getting Started with Character Animator, Video Games, University of Tennessee at Chattanooga – Research Guides.

# Lens Studio, Snapchat, and Blender: How To

Creating Videos, Intro to Video Production, Captioning with Adobe Premiere Pro, How to Add Video Clips and Trim Videos in Premiere Rush, Faking a Zoom Green Screen, Adobe Premiere for Artists, Video Editing for Social Media, Becoming a YouTuber, Tips and Tricks for Livestreaming, Video Captioning, Optimizing your Smartphones for Audio/Video, Memes + Misinformation, Collaborative Multimedia Tools, Microsoft PowerPoint Basics, Microsoft Excel Basics, Microsoft Word Basics, Miscellaneous, 3D Modeling in Fusion 360, Getting Started with SketchUp, Getting Started with Creating SVGs for Laser Cutting, Microsoft Office and Google Docs, 3D Collage with Blender, Making Face Masks at Home, Intro to Canva, Make Posters in PowerPoint, Crash Course in Graphic Design, Makerspace, Audio for Podcasting, DIY at Home Recording, Graphic Design, How to Write a Podcast, Optimizing your Smartphones for Audio/Video.

These are some of the resources, videos, and guides that ACTAL members have contributed to our shared resource space.

We use threeJS, MediaPipe, and many of the other available libraries to identify and track body parts, fingers, and facial features. While research into AR, particularly body segmentation, is active and improving with each iteration, there are still some challenges with the technology. However, for smaller experiments, these tools prove extremely valuable as the entry level to our production pipeline.

Reliable gesture recognition continues to be a challenge in developing interactive experiences, and research into machine learning will become increasingly important to our work. We expect machine learning motion tracking libraries and datasets such as COCO (Microsoft Common Objects in Context), MPII Human Pose, JHMDB (Joint-annotated Human Motion Data Base), LSP (Leeds Sports Pose), and others to become more reliable, easier to set up, and easier to read into game engines such as Unity. Currently, a base knowledge of Python is required to access the functions in the datasets.

A complete VFX or game studio motion tracking system requires dozens of infrared cameras placed around the capture space, and the subject needs to be covered in reflective balls. The camera data is triangulated, and the locations of the spheres are reconstructed in virtual space. In other systems, special suits are worn, with embedded sensors that use accelerometers and gyroscopes to track the wearer’s movement. These professional systems take a great deal more resources to integrate into our larger experiences, but allow for more flexibility and scale than consumer-level tools. The captured data is processed and often “cleaned” by artists, who remove noisy data and add missing motion to fill the gaps. Gaps are particularly challenging for optical motion capture suits, where markers become occluded by props, other actors, or even the floor.
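The optical capture steps described above (triangulating marker positions from the camera data, then filling the gaps left by occluded markers) can be illustrated with a short Python sketch. This is a minimal example under stated assumptions, not how any particular motion capture vendor works: it assumes each camera is already calibrated so that a 2D marker detection can be turned into a 3D ray (an origin plus a direction), triangulates a marker seen by two cameras with the simple midpoint method, and fills occlusion gaps by linear interpolation. The helpers `triangulate_midpoint` and `fill_gaps` are hypothetical names. Real systems combine many cameras, typically with least-squares methods such as the direct linear transform (DLT), and cleanup tools offer more sophisticated fills such as spline-based ones.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Hypothetical helper: estimate a marker position from two camera rays.

    Each ray is origin c + t * direction d. Because of noise the rays rarely
    intersect exactly, so we return the midpoint of the shortest segment
    between the two lines (the standard closest-point formula).
    """
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("camera rays are (nearly) parallel")
    t1 = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t2 = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = _add(c1, _scale(d1, t1))
    p2 = _add(c2, _scale(d2, t2))
    return _scale(_add(p1, p2), 0.5)


def fill_gaps(track: Sequence[Optional[Vec3]]) -> List[Optional[Vec3]]:
    """Hypothetical helper: fill occluded frames (None) by linear interpolation.

    Only gaps with a known frame on both sides are filled; gaps at the very
    start or end of the track stay None.
    """
    out: List[Optional[Vec3]] = list(track)
    known = [i for i, p in enumerate(out) if p is not None]
    for i0, i1 in zip(known, known[1:]):
        start, end = out[i0], out[i1]
        span = i1 - i0
        for k in range(1, span):
            t = k / span  # fraction of the way from start to end
            out[i0 + k] = _add(_scale(start, 1.0 - t), _scale(end, t))
    return out


if __name__ == "__main__":
    # Two made-up calibrated cameras at (0,0,0) and (4,0,0) both see a marker at (1,2,3).
    print(triangulate_midpoint((0.0, 0.0, 0.0), (1.0, 2.0, 3.0),
                               (4.0, 0.0, 0.0), (-3.0, 2.0, 3.0)))  # (1.0, 2.0, 3.0)
    # A marker track with two occluded frames in the middle.
    print(fill_gaps([(0.0, 0.0, 0.0), None, None, (3.0, 0.0, 0.0)]))
```

The midpoint method is chosen here only because it is short and easy to verify by hand; with more than two cameras, a least-squares solve over all rays is the more robust choice.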

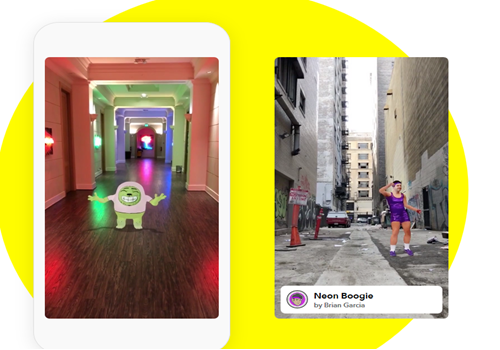

Much of the underlying methodology is hidden in a “black box” in tools such as Lens Studio and Meta’s tools. Once the client approves these initial “proofs of concept”, work begins on developing a fully functional tool.
